In 2008 the Defense Advanced Research Projects Agency, or DARPA, released a report entitled “ExaScale Computing Study: Technology Challenges in Achieving ExaScale Systems.” The report outlined several obstacles to achieving the next three orders of magnitude in performance beyond petascale systems. Around that time, Sandia developed a strategy and infrastructure for hardware/software co-design. Co-design is a process for exploring hardware design tradeoffs together with the changes to algorithms, applications and system software needed to motivate and exploit new hardware capabilities. As the HPC community shifted its focus toward the many challenges of delivering a usable exascale system for the Department of Energy, or DOE, one of the primary goals of Sandia’s co-design work became putting a “Sandia Inside” logo on a DOE exascale system. Playing off the popular “Intel Inside” marketing campaign, the ultimate objective was for Sandia’s influence on a DOE exascale system to be clearly evident.

The Frontier system at Oak Ridge National Laboratory, built by supercomputer vendor Hewlett Packard Enterprise, or HPE, became the first machine to break the exaflops barrier on the Top500 list in June 2022. Two of Sandia’s co-design technologies were instrumental in designing Frontier’s Slingshot-11 high-performance interconnect, which Cray, Inc. began developing and HPE continued after acquiring Cray in 2019. Sandia’s Portals 4 network programming interface and Structural Simulation Toolkit, or SST, both played a key role in the development of HPE’s Slingshot-11 fabric. The fabric is composed of two hardware components: the network interface controller, or NIC, called Cassini, and the network switch, called Rosetta. Frontier is the first DOE exascale system to be deployed, but the next two DOE exascale systems, El Capitan at Lawrence Livermore National Laboratory and Aurora at Argonne National Laboratory, will also use the Slingshot-11 network, so all three DOE exascale systems will have “Sandia Inside.”

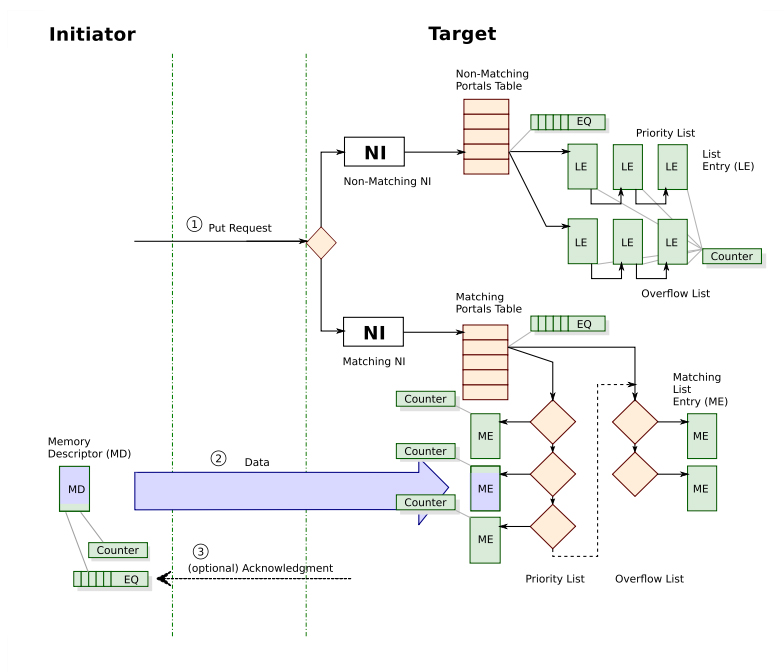

The Portals 4 network programming interface was specifically designed to enable co-design and to drive the development of network hardware that meets the needs of applications and services running on today’s HPC platforms. Those needs are demanding: the network of an HPC machine has stringent performance and scalability requirements that are unique to scientific computing applications and system services. For HPC systems, the interconnection network determines how large the system can grow and how fast applications that span the machine can execute.

Prior versions of the Portals network programming interface, developed primarily by Sandia, were essentially portability layers that translated low-level network functionality to meet the needs of HPC applications and system services. Portals 4, by contrast, encapsulates the capabilities that HPC applications and system services need in a way that allows NIC designers to implement those capabilities efficiently in hardware, narrowing the semantic gap between what the hardware provides and what the software needs. Unlike other low-level network programming interfaces, Portals 4 focuses not on a software implementation but on a detailed specification of objects and functions. This approach leaves hardware architects free to keep innovating while reducing the need for software portability layers.

“Portals 4 provided inspiration for the Cassini line of NICs in the HPE Cray Slingshot network, the interconnect enabling the first exascale system on the Top500 list. As a network Application Programming Interface, Portals 4 does not specify a network interface architecture or a network transport protocol; rather, it provides insight into how to build a network transport for high performance computing,” HPE Vice President and General Manager Mike Vildibill said. “The visionary work done by the Portals team significantly accelerated the architecture definition phase of Cassini.”

In addition to HPE, other vendors have designed and deployed network hardware based on Portals 4. The Bull eXascale Interconnect (BXI, BXI-2 and BXI-3) networks produced by European supercomputing vendor Atos are also based on Portals 4. Portals 4 was also a key driver in the development of Intel’s OmniPath Architecture, which was recently taken over by Cornelis Networks as OmniPath Express.

Portals 4 has also influenced software design. The Open Fabrics Interface is an extension of Portals 4 that Intel spearheaded to help transition its OmniPath Architecture series of HPC networks from an initial product based on InfiniBand, which exposed the Verbs interface, to later generations based on Portals 4.

“In my previous role as Fellow & Chief Technologist at Intel, the Portals 4 specification was a key technology that informed and guided the evolution of Intel’s OmniPath Architecture,” said Bill Magro, Chief Technologist for HPC at Google Cloud. “Further, Portals 4 was very influential in the design of the network interface hardware and the semantics of the Open Fabrics Interface API, or OFI. OFI was created with two goals in mind: first, create a much-improved semantic match between the needs of HPC and Machine Learning application software and, second, create a flexible and extensible interface to unlock faster innovation and greater competition in the interconnect fabric space. OFI has been very successful in both of these regards, as it has seen widespread adoption by multiple network vendors. Portals 4 was a critical proof point that the industry could develop tailored networking technology while maintaining a vendor-neutral software interface, and it is arguably the most successful hardware/software co-design tool for high performance networking I encountered during my years of technical leadership.”

Commercial adopters of OFI include Amazon’s Elastic Fabric Adapter. Portals 4 has also been highly influential in the supercomputing research community, spurring further exploration of network software and hardware design for cloud and data center computing.

Sandia’s SST also played an important role in the development of the Slingshot-11 network. SST is a parallel discrete event simulation framework that models the low-level interactions of computing devices at the architectural level. Its modular design enables extensive exploration of individual components, such as memory configurations and processor instructions, without intrusive changes to the simulator; in particular, it lets vendors easily plug their own device simulators into the framework. Because SST runs in parallel, it can simulate large, complex systems more quickly. Many component and system vendors, including Cray, used SST extensively for design-space exploration as part of the PathForward program under the DOE Exascale Computing Project.
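To make the idea of a discrete event simulator with pluggable device models a little more concrete, here is a minimal sketch in Python. It is not SST’s API (SST’s core is written in C++), and it runs sequentially, whereas SST distributes components across processes and keeps them synchronized. The `Simulator`, `SwitchModel` and `NicModel` classes and the latency values are hypothetical, chosen only for illustration. The sketch shows two ideas: a queue of events ordered by simulated time, and device models that interact only by scheduling events for one another, so one model could be swapped for another without touching the simulation kernel.

```python
import heapq
import itertools
from typing import Dict, List, Tuple


class Simulator:
    """Minimal sequential discrete-event kernel (illustrative only, not SST's API)."""

    def __init__(self) -> None:
        self.now = 0  # current simulated time in nanoseconds (hypothetical unit)
        self._queue: List[Tuple[int, int, str, object]] = []
        self._seq = itertools.count()  # tie-breaker: same-time events run in FIFO order
        self.components: Dict[str, "Component"] = {}

    def add(self, name: str, component: "Component") -> None:
        # Register a pluggable device model under a name other models can target.
        component.sim = self
        component.name = name
        self.components[name] = component

    def schedule(self, delay: int, target: str, payload: object) -> None:
        # Deliver `payload` to component `target` after `delay` simulated ns.
        heapq.heappush(self._queue, (self.now + delay, next(self._seq), target, payload))

    def run(self) -> None:
        # Repeatedly pop the earliest event, advance the clock, and dispatch it.
        while self._queue:
            time, _, target, payload = heapq.heappop(self._queue)
            self.now = time
            self.components[target].handle(payload)


class Component:
    """Interface every device model implements; the kernel only calls handle()."""

    sim: Simulator
    name: str

    def handle(self, payload: object) -> None:
        raise NotImplementedError


class SwitchModel(Component):
    """Hypothetical switch model: forwards each packet after a fixed latency."""

    def __init__(self, latency_ns: int) -> None:
        self.latency_ns = latency_ns

    def handle(self, packet: dict) -> None:
        self.sim.schedule(self.latency_ns, packet["dst"], packet)


class NicModel(Component):
    """Hypothetical NIC model: injects packets and records arrival times."""

    def __init__(self, link_ns: int) -> None:
        self.link_ns = link_ns
        self.received: List[Tuple[int, str]] = []

    def send(self, dst: str, switch: str) -> None:
        # The packet reaches the switch after one link traversal.
        self.sim.schedule(self.link_ns, switch, {"src": self.name, "dst": dst})

    def handle(self, packet: dict) -> None:
        self.received.append((self.sim.now, packet["src"]))


sim = Simulator()
sim.add("sw0", SwitchModel(latency_ns=300))
sim.add("nic0", NicModel(link_ns=100))
sim.add("nic1", NicModel(link_ns=100))
sim.components["nic0"].send("nic1", "sw0")
sim.run()
print(sim.components["nic1"].received)  # [(400, 'nic0')]
```

Replacing `SwitchModel` with a more detailed (or proprietary) switch model would only require another class that implements `handle`; the event loop itself stays unchanged.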

HPE Senior Distinguished Technologist Duncan Roweth explained, “Cray had always performed extensive system-scale simulation using homegrown tools. As the Slingshot project started, the Cray team decided to adopt an open-source simulator. We selected SST for two main reasons. Firstly, we knew that we would need to scale to large system sizes, thousands of switches with tens of thousands of endpoints or NICs. Secondly, the DOE Labs community was developing motifs and miniApps that characterized their applications and integrating them into SST. The Cray team worked with developers at Sandia introducing a device interface that allowed for interchange of open source and proprietary device models. Cray implemented models of the Rosetta switch and Cassini NIC that used this interface. The resulting devices are being used in all three of the US exascale systems. We regard the work on SST that Cray undertook with Sandia as an excellent example of vendor-labs codesign, one that we will build upon in future projects.”

Sandia’s efforts in developing co-design tools such as Portals 4 and SST, in using those tools to explore the impact and interaction of hardware and software, and in partnering with HPC system and component vendors like HPE to turn design concepts into reality have produced many successes. While the HPE logo will eventually appear on all three DOE exascale machines, all three could just as easily display the “Sandia Inside” logo too.