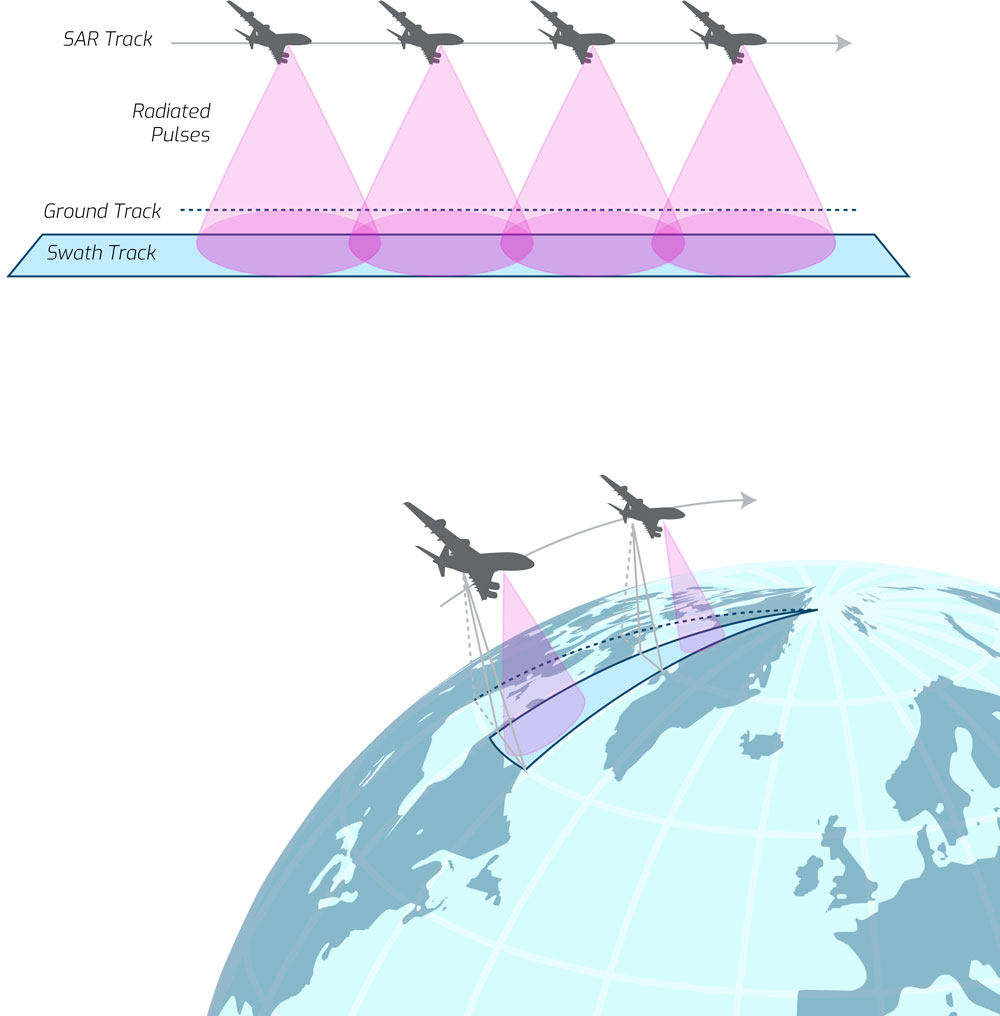

Radar bounces radio waves off distant objects and uses the reflected waves to display those objects on a screen, letting us perceive things normally beyond our sight. The larger the antenna dish receiving the reflected waves, the more detail we can discern on the target. Synthetic Aperture Radar, or SAR, creates the equivalent of a huge receiving antenna dish by using a radar on an orbiting satellite or a moving airplane to collect many radar measurements of a target from different angles (see Figure 1). Because the satellite or airplane moves along a precisely controlled path, these measurements can be captured and then combined into a single high-resolution image of the target.
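The resolution benefit can be made concrete with the standard textbook approximations for stripmap SAR. This is an assumption: the article does not say which SAR mode or parameters are involved. A real antenna of length D at wavelength λ and range R resolves roughly λR/D along track. A synthetic aperture instead resolves roughly D/2, which does not depend on range. The function names and parameter values below are hypothetical and illustrative, not figures from Sandia.

```python
def real_aperture_azimuth_resolution(wavelength_m, range_m, antenna_length_m):
    """Approximate along-track resolution (m) of a conventional radar.

    The beam spreads over an angle of roughly wavelength / antenna length,
    so its footprint on the ground grows linearly with range.
    """
    return wavelength_m * range_m / antenna_length_m


def sar_azimuth_resolution(wavelength_m, range_m, antenna_length_m):
    """Approximate stripmap SAR along-track resolution (m).

    A point target stays in the beam while the platform travels one beam
    footprint, so that footprint is the length of the synthetic aperture.
    The factor of 2 reflects the two-way (transmit and receive) path.
    """
    synthetic_aperture_m = real_aperture_azimuth_resolution(
        wavelength_m, range_m, antenna_length_m
    )
    return wavelength_m * range_m / (2 * synthetic_aperture_m)


# Illustrative (hypothetical) values: 3 cm wavelength, 800 km range, 10 m antenna.
print(real_aperture_azimuth_resolution(0.03, 800_000, 10.0))  # ≈ 2400 m
print(sar_azimuth_resolution(0.03, 800_000, 10.0))            # ≈ 5 m
```

The real antenna blurs the target across kilometers. The synthesized aperture, built from the platform's motion, brings the same antenna down to meters.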

However, gathering the data is only the beginning: it must also be analyzed and interpreted. Because high-resolution radar images contain so much data, automating SAR analysis and interpretation has been a challenge ever since SAR was first developed in the 1950s. Early attempts to do this with deep neural networks (DNNs) in the 1980s, 1990s and later did not work well. Although DNN development began in the 1970s, the networks became dramatically more powerful beginning in 2012, ushering in a wave of neural network advances. Even so, more recent DNN research on SAR has tended to focus on only a few questions or variables.

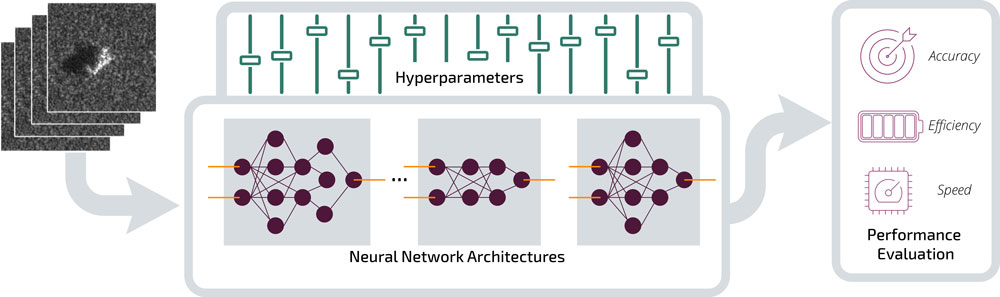

Sandia researchers wanted to dig both deep and broad in assessing DNNs for SAR automatic target recognition. Using high-performance computing (HPC), they evaluated thousands of deep neural networks to compare different approaches to recognizing targets in SAR images. The team assessed multiple DNN architectures for accuracy and speed (see Figure 2), then used data augmentation to close the gap between the training datasets and real-world operating scenarios, making the DNN results far more accurate.

The Sandia team conducted an extensive, rigorous analysis of the DNN approaches that have emerged over the last decade. In doing so, they investigated whether neural networks could perform well on this task and which computational structures enable high accuracy. They examined deeper and larger networks, wider networks, more sophisticated connections between neural network layers, various optimization methods and more. This work involved training many thousands of networks and achieved state-of-the-art benchmark accuracy.

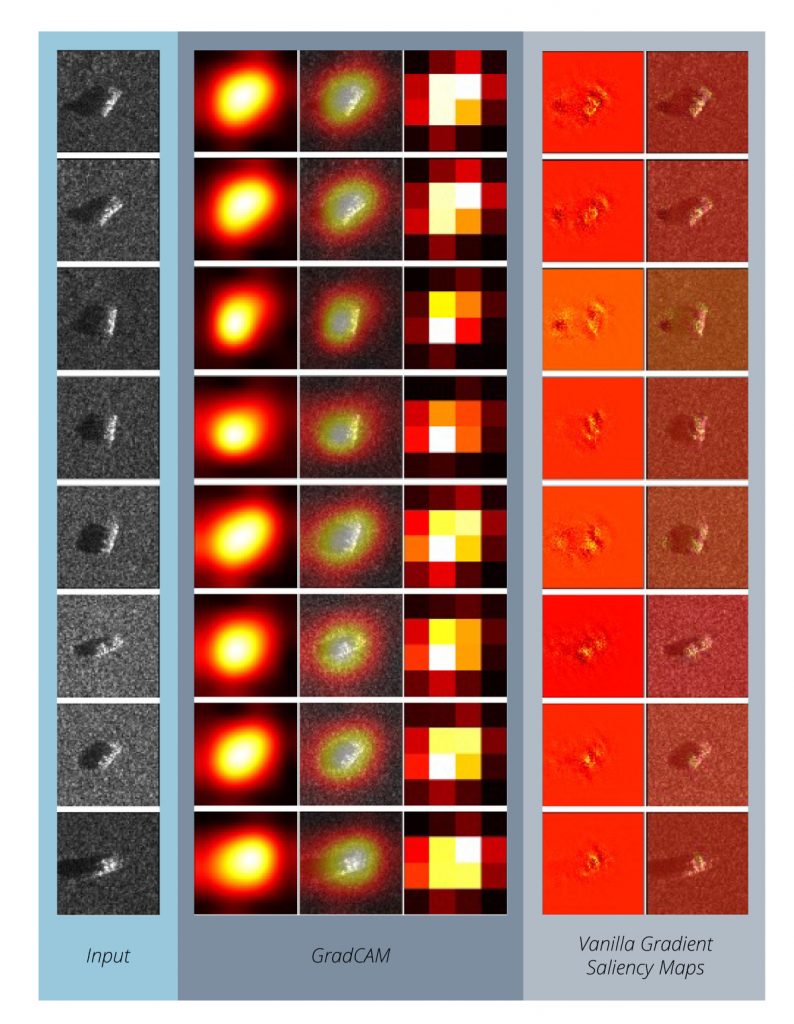

Beyond accuracy, the team wanted to understand why the neural networks make the decisions they do, so they incorporated model explainability methods. They generated heatmaps highlighting the parts of an input image that may influence the DNN's decision (see Figure 3). For example, is the DNN basing its decision on the shadow of a vehicle, the target itself, the background or something else?
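The article does not say which explainability methods the team used. One simple, widely used way to produce such a heatmap is occlusion sensitivity. You cover one patch of the image at a time, re-score the image, and record how much the model's score for the class of interest drops. In the minimal NumPy sketch below, `score_fn` is a hypothetical stand-in for a trained DNN, and the patch size, stride and fill value are illustrative assumptions.

```python
import numpy as np


def occlusion_heatmap(image, score_fn, patch=8, stride=4, fill=0.0):
    """Occlusion-sensitivity heatmap for a 2-D image.

    score_fn(image) -> float is a hypothetical stand-in for the trained
    DNN's score for the class of interest. Each pixel of the returned map
    holds the average score drop over all patches that covered it.
    """
    h, w = image.shape
    baseline = score_fn(image)
    heat = np.zeros((h, w), dtype=float)
    counts = np.zeros((h, w), dtype=float)
    for top in range(0, h - patch + 1, stride):
        for left in range(0, w - patch + 1, stride):
            occluded = image.copy()
            occluded[top:top + patch, left:left + patch] = fill
            drop = baseline - score_fn(occluded)
            # Credit the score drop to every pixel this patch covered.
            heat[top:top + patch, left:left + patch] += drop
            counts[top:top + patch, left:left + patch] += 1
    # Average over overlapping patches; never-covered pixels stay at 0.
    return np.divide(heat, counts, out=np.zeros_like(heat), where=counts > 0)
```

Large values mark regions where covering the pixels most reduces the model's score. With a real SAR classifier, comparing values on the vehicle with values on its shadow or on the background addresses exactly the question raised above.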

Another important area of investigation was reproducibility. Can a given DNN's performance be reproduced? Is one computational structure actually better than another, or did a single training run simply happen to turn out better? The team investigated these questions thoroughly, deepening their understanding of how run-to-run variability affects DNNs for SAR automatic target recognition.
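The article does not describe the team's statistical procedure. A common way to ask whether one architecture is really better or just got lucky is to train each candidate several times with different random seeds and compare the gap in mean accuracy with the seed-to-seed spread. The sketch below assumes that setup. The function name `compare_across_seeds` is hypothetical, and it returns Welch's t statistic only. For a p-value, SciPy's `scipy.stats.ttest_ind(a, b, equal_var=False)` performs Welch's test.

```python
import math
import statistics


def compare_across_seeds(acc_a, acc_b):
    """Compare two architectures using test accuracies from repeated runs.

    acc_a and acc_b each hold accuracies from training that architecture
    several times (at least twice) with different random seeds.
    Returns (difference in mean accuracy, Welch's t statistic).
    """
    diff = statistics.mean(acc_a) - statistics.mean(acc_b)
    # Sample variances capture run-to-run (seed) variability.
    var_a = statistics.variance(acc_a)
    var_b = statistics.variance(acc_b)
    # Standard error of the difference, allowing unequal variances (Welch).
    se = math.sqrt(var_a / len(acc_a) + var_b / len(acc_b))
    if se == 0:
        # No run-to-run spread: report an infinite t for any nonzero gap.
        return diff, (math.copysign(math.inf, diff) if diff else 0.0)
    return diff, diff / se
```

As a rough rule of thumb, a |t| near 1 or below means the gap between architectures is comparable to seed-to-seed noise, so a single lucky training run could explain it.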

Together, the team's extensive model analysis, data augmentation, reproducibility studies and explainability work are building a deep understanding of how to apply neural networks to SAR automatic target recognition.