Traditional HPC has evolved into a broad array of architectures to meet a growing range of computing needs and workloads. New technologies continue to deliver unprecedented performance in areas such as machine learning, biologically inspired computing, and quantum processing. Investigating early how the computational designs of predictive simulation codes must evolve to exploit these architectures will help drive technology decisions at the national labs. By exploring these diverse computational approaches to increase simulation fidelity, scalability, and performance, Sandia is working to revolutionize computing for the national security mission.

Sandia continues to be at the forefront of technology investigation through its acquisition of new testbed systems. The following are some of the systems procured and managed in a partnership between Sandia’s Extreme Scale Computing and IT Infrastructure Services groups.

Sandia’s new Fujitsu system is the first in DOE, and one of the first systems in the world, with A64FX processors. The system couples Arm processors, wide vector units implementing the Scalable Vector Extension (SVE), and on-package High Bandwidth Memory (HBM), which provides more than double the memory bandwidth of traditional DDR-based memory. This combination could benefit memory-bandwidth-bound algorithms that do not perform well on GPUs.

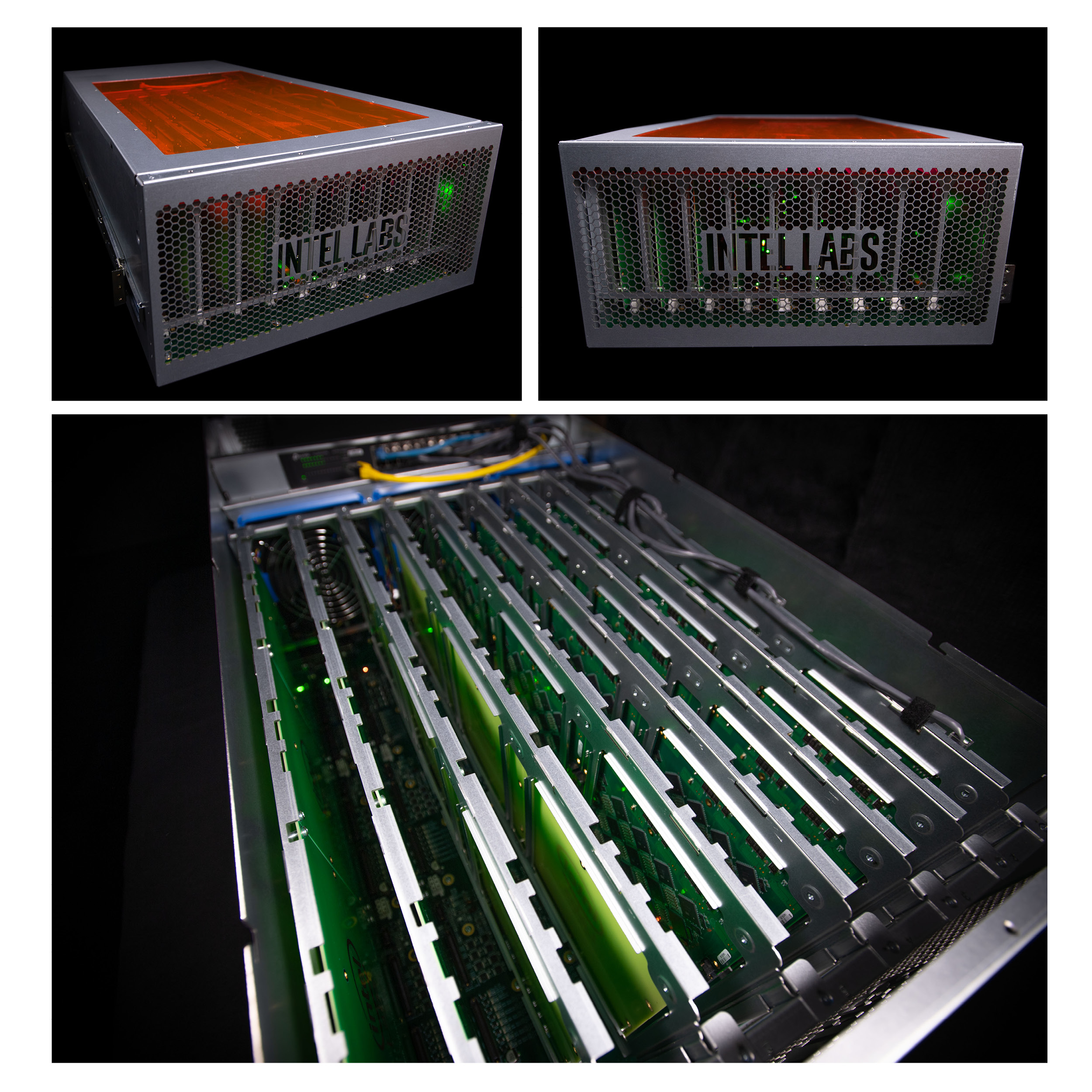

Sandia acquired a large-scale spiking neuromorphic testbed to explore novel neural-inspired approaches to computation. The first testbed in a multi-year partnership with Intel, the Loihi system provides 50 million neurons and 50 billion synapses, making it one of the five largest spiking neuromorphic platforms in the world. This capability will allow researchers to investigate how scale can enable a variety of applications and simulations. Systems like Loihi use event-driven computation: neurons communicate through sparse spikes, and work is performed only when a spike arrives, which offers greater energy efficiency.
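To illustrate why event-driven computation saves work, the following minimal sketch counts synapse visits in a toy network under two update schemes. It is not how Loihi is programmed; the function name, the random connectivity, and the randomly drawn spikes (in place of real neuron dynamics) are all hypothetical assumptions chosen only to show the principle.

```python
import random


def count_synaptic_updates(num_neurons, fanout, spike_prob, steps, seed=0):
    """Count synapse visits under clock-driven vs. event-driven updating.

    Hypothetical toy model: each neuron has `fanout` outgoing synapses, and
    spikes are drawn at random with probability `spike_prob` per step rather
    than produced by real neuron dynamics.
    """
    rng = random.Random(seed)
    # Random connectivity: targets[i] lists the neurons that neuron i projects to.
    targets = [rng.sample(range(num_neurons), fanout) for _ in range(num_neurons)]
    potential = [0.0] * num_neurons  # accumulated synaptic input per neuron
    clock_updates = 0
    event_updates = 0
    for _ in range(steps):
        spiking = [i for i in range(num_neurons) if rng.random() < spike_prob]
        # Clock-driven: every synapse is visited on every step, spike or not.
        clock_updates += num_neurons * fanout
        # Event-driven: only the outgoing synapses of neurons that spiked do work.
        for src in spiking:
            for dst in targets[src]:
                potential[dst] += 1.0
                event_updates += 1
    return clock_updates, event_updates


clock, event = count_synaptic_updates(num_neurons=1000, fanout=100, spike_prob=0.02, steps=50)
print(f"clock-driven: {clock} updates, event-driven: {event} updates")
```

With about 2% of neurons active per step, the event-driven count is roughly 2% of the clock-driven count. The sparser the activity, the larger the savings, which is the intuition behind the energy-efficiency claim.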

Purpose-built for machine learning, Sandia’s Graphcore system is based on a unique chip called the Intelligence Processing Unit (IPU). The IPU has a much larger core count than central processing units or GPUs, along with a large amount of on-chip memory distributed among its cores. These features are intended to accelerate neural networks for a variety of tasks such as image processing, neural architecture search, scientific computing, and natural language processing.

The recent upgrade of the Cray compass software collaboration platform to the new HPE/Cray Shasta architecture incorporates a next-generation high-speed network interconnect with features that can mitigate message contention and congestion. This interconnect was made possible by DOE investments through the Exascale Computing Project’s PathForward program. In addition, the upgrade will include new processors from AMD, which was recently selected as a technology provider for several leadership-class platforms in the DOE complex.

Demand for leadership-class computing cycles continues to grow. NNSA’s largest current system, Sierra, deployed at LLNL, combines high-performance CPU and GPU components, allowing each type of physics or algorithm to run on the hardware best suited to it. In response to increased demand and to requirements for code porting, optimization, and initial science runs, the local testbed versions of Sierra were expanded by 33%.