The CaaS journey at Sandia

The Accelerated Digital Engineering (ADE) initiative at Sandia is pioneering the creation of radically streamlined and highly interactive user interfaces to advance modeling and simulation capabilities. The goal is to improve end-user productivity and increase the impact on early design and surveillance activities. Underpinning ADE is a Computing-as-a-Service (CaaS) layer that integrates cloud and HPC technologies to support the deployment of interactive, user-facing services and the orchestration of back-end computing jobs running across Sandia’s computing resources. Realizing this vision required an interdisciplinary team of simulation, user experience and computing infrastructure experts working across organizational boundaries to push computing at Sandia forward.

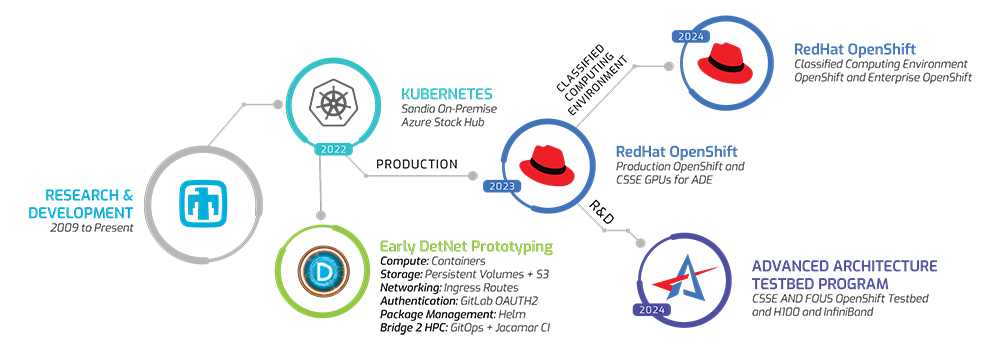

Figure 1 provides a high-level view of how CaaS infrastructure has evolved over the past several years. Early research explored cloud technologies such as virtualization and containers. In 2022, an on-premises deployment of Microsoft’s Azure platform was used to explore Kubernetes (K8s), a cloud operating system for containerized applications and services, and to deploy ADE exemplar applications.

The team collaborated with the ADE DetNet team to explore how to provision computing, storage and networking resources on the K8s platform and link to Sandia’s existing HPC infrastructure. A novel scheme leveraging GitLab Jacamar runners, a technology developed by the Exascale Computing Project, was used to enable front-end applications running on K8s to link to and manage jobs running on HPC systems (see Figure 1). Throughout 2022 and 2023, K8s usage migrated to production instances of the Red Hat OpenShift Container Platform (OCP), a commercial version of K8s, running on Sandia’s restricted and classified computing environments. These production systems were augmented with additional computing resources, including servers and graphics processing units (GPUs), to support ADE CaaS workloads.
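The article does not describe how front-end applications hand work to the Jacamar runners. One plausible shape is for the front end to start a GitLab pipeline whose jobs are then picked up by a runner on the HPC side. The sketch below builds, but does not send, a request to GitLab's pipeline trigger endpoint. The host name, project ID, token and variable names are hypothetical placeholders, not details of Sandia's deployment.

```python
from urllib.parse import urlencode
from urllib.request import Request


def build_trigger_request(base_url, project_id, trigger_token, ref, variables):
    """Build (but do not send) a GitLab pipeline-trigger request.

    Pipeline variables are passed as form fields named variables[KEY].
    """
    form = {"token": trigger_token, "ref": ref}
    for key, value in variables.items():
        form[f"variables[{key}]"] = value
    url = f"{base_url}/api/v4/projects/{project_id}/trigger/pipeline"
    return Request(url, data=urlencode(form).encode("utf-8"), method="POST")


# All values below are illustrative assumptions.
request = build_trigger_request(
    base_url="https://gitlab.example.com",
    project_id=42,
    trigger_token="REDACTED",
    ref="main",
    variables={"NODES": "4", "CASE_ID": "demo-001"},
)
```

In such a setup the front end would pass the request to `urllib.request.urlopen` and then poll the pipeline status, while the pipeline's job definition decides what is submitted to the HPC scheduler.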

The role of R&D on MPI, InfiniBand, CaaS API and multi-cluster federation

Recent R&D efforts at Sandia have led to the deployment of an OpenShift testbed designed to support exploratory activities that are challenging to execute in production environments. This initiative is part of a broader commitment to enhancing computing capabilities on cloud-based platforms. The Sandia team is adding Message Passing Interface (MPI) and InfiniBand (IB) support to K8s to run HPC jobs, while investigating Kubernetes-like Control Planes (KCP) to route jobs seamlessly to both K8s and HPC clusters. These efforts are crucial for investigating the integration of cloud computing with HPC, with the aim of a seamless convergence that can eventually be applied to production environments. Techniques and technologies that prove successful in the OpenShift R&D testbed are intended to transition to production, benefiting ADE workloads and end-users. Current approaches use K8s operators to provide some of these advanced capabilities. These operators, developed by the Cloud Native Computing Foundation or major industry vendors, provide a foundational framework for Sandia’s in-house research, testing and development processes.

The CaaS team has successfully executed multi-node MPI jobs within the elevated security architecture of the OpenShift Cluster (OC), thereby enabling scalable ADE workflows on the platform. In addition, an effort to enable IB support aims to enhance node and core affinity for applications that use OC as their back end.
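The article does not say which operator is used to run MPI jobs. As an illustration only, the sketch below assumes the Kubeflow MPI Operator and its `MPIJob` resource (`kubeflow.org/v2beta1`), and builds a manifest with one launcher and several workers. The image name, solver path and sizes are hypothetical.

```python
import json


def make_mpijob(name, image, workers, slots_per_worker, command):
    """Return an MPIJob manifest as a plain dict (assumes the Kubeflow MPI Operator)."""

    def pod(container_name, cmd=None):
        container = {"name": container_name, "image": image}
        if cmd is not None:
            container["command"] = cmd
        return {"spec": {"containers": [container]}}

    total_ranks = workers * slots_per_worker
    launcher_cmd = ["mpirun", "-n", str(total_ranks)] + command
    return {
        "apiVersion": "kubeflow.org/v2beta1",
        "kind": "MPIJob",
        "metadata": {"name": name},
        "spec": {
            "slotsPerWorker": slots_per_worker,
            "mpiReplicaSpecs": {
                # The launcher runs mpirun; the workers host the MPI ranks.
                "Launcher": {"replicas": 1, "template": pod("launcher", launcher_cmd)},
                "Worker": {"replicas": workers, "template": pod("worker")},
            },
        },
    }


# Hypothetical image and executable, for illustration only.
manifest = make_mpijob(
    name="ade-demo",
    image="registry.example.com/ade/solver:latest",
    workers=4,
    slots_per_worker=2,
    command=["/opt/app/solver"],
)
manifest_json = json.dumps(manifest, indent=2)
```

The JSON form can be applied to a cluster where that operator is installed. A hardened cluster would typically also need security context and resource settings, which are omitted here.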

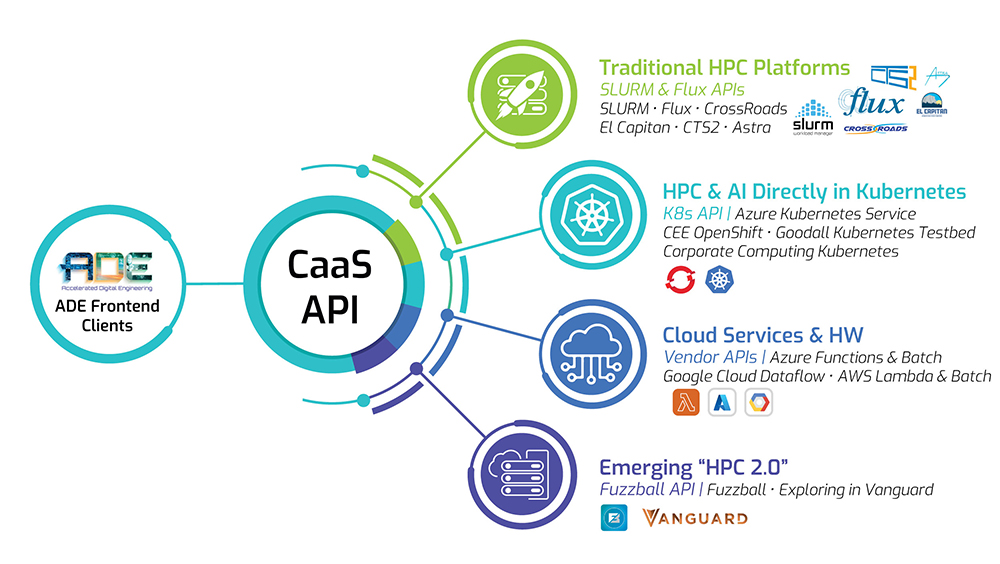

Figure 2 illustrates the CaaS Application Programming Interface (API) as a federation layer designed to communicate with the underlying computing infrastructure at Sandia. Additionally, the CaaS team has initiated R&D efforts to enable the use of KCP as a federation layer. The KCP initiative is developing a unified back-end control plane that brings together traditional and modern HPC environments, cloud-based infrastructures such as K8s and OpenShift services from major cloud vendors, and emerging workflow managers such as Fuzzball. This integration takes an API-based approach and aims to improve how developers interact with HPC systems and use them in their workflows.
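The federation idea can be sketched as a thin layer that accepts one job description and forwards it to whichever back end is registered for the requested target. This is a toy illustration, not the CaaS API: `JobSpec`, `FederationLayer` and the stand-in back ends are hypothetical names introduced here.

```python
from dataclasses import dataclass
from typing import Callable, Dict


@dataclass(frozen=True)
class JobSpec:
    name: str
    target: str   # e.g. "k8s" or "hpc" (hypothetical target labels)
    command: str


class FederationLayer:
    """Route a job to the back end registered for its target."""

    def __init__(self) -> None:
        self._backends: Dict[str, Callable[[JobSpec], str]] = {}

    def register(self, target: str, submit: Callable[[JobSpec], str]) -> None:
        self._backends[target] = submit

    def submit(self, job: JobSpec) -> str:
        backend = self._backends.get(job.target)
        if backend is None:
            raise ValueError(f"no backend registered for target {job.target!r}")
        return backend(job)


# Stand-in back ends that only return an identifier; real ones would call
# a cluster API or a batch scheduler.
api = FederationLayer()
api.register("k8s", lambda job: f"k8s:{job.name}")
api.register("hpc", lambda job: f"hpc:{job.name}")

job_id = api.submit(JobSpec(name="mesh-refine", target="hpc", command="./refine"))
```

Keeping the job description independent of the back end is what lets a single client interface cover both container platforms and batch-scheduled HPC systems.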

Exploring K8s for AI services

The OCP infrastructure at Sandia has proven to be an asset for deploying Artificial Intelligence (AI) services efficiently. By leveraging OCP, Sandia has been able to harness the power of containerization and orchestration to streamline the deployment, scaling and management of AI and Machine Learning (ML) workloads. This approach not only capitalizes on OCP’s inherent strengths in performance, reliability and scalability, but also benefits from its elasticity and flexibility, making it a well-suited platform for the dynamic requirements of AI services. Today, Sandia is positioned to deliver AI services and continues to move ADE forward to fulfill mission demands more efficiently.

Sandia’s future posture for adopting cloud technologies

Sandia’s CaaS team is working to integrate new cloud technologies (containers, K8s, Fuzzball, etc.) with our traditional HPC environment to modernize and improve it. A further goal is to use the improved computing environment to explore delivering ASC modeling and simulation capabilities as turnkey services to end-users, making them more accessible and easier to use.

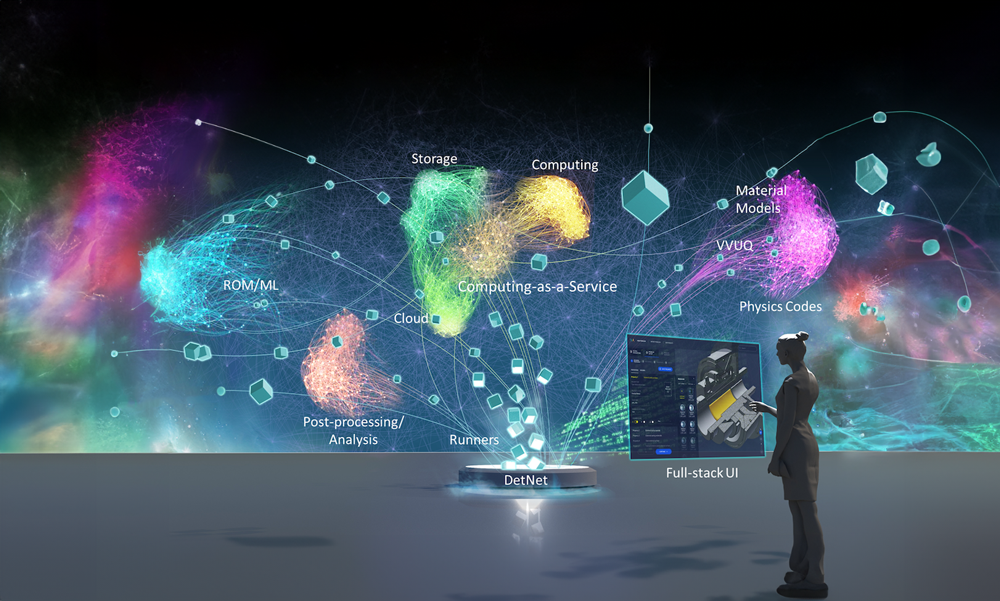

Figure 3 shows a graphic artist’s interpretation of a complex digital ecosystem, created approximately four years ago. At that point, DetNet and other ADE applications like it were simply a vision. Four years later, Sandia’s CaaS team has realized this vision through collaboration among the integrated codes teams, web application and user experience experts, and CaaS infrastructure staff.

Beyond 2025, Sandia aims to maintain its forward-looking posture by actively participating in holistic co-design efforts with cloud technologies. These efforts aim to provide critical insights to enhance the understanding of HPC requirements and increase Sandia’s impact in the larger computing community.