As the demands on computers shift rapidly toward data-centric tasks such as image processing, voice recognition and autonomous driving, the need for more efficient computing grows just as quickly.

Given the limitations of traditional computing, scientists and commercial manufacturers have focused on the field of neuromorphic computing, which mimics the way the human brain carries out data-centric tasks.

Sandia researchers have collaborated with Stanford University and the University of Massachusetts Amherst to address these challenges, and they have recently made breakthroughs in neuromorphic computing and in the broader fields of organic electronics and solid-state electrochemistry.

This work, published last month in Science, introduces a novel approach to parallel programming of an ionic floating-gate memory array, which allows processing of large amounts of information simultaneously in a single operation. The research is inspired by the human brain, where neurons and synapses are connected in a dense matrix and where information is processed and stored at the same location.

Sandia researchers used parallel programming to demonstrate how to adjust the strengths of the synaptic connections in the array so that computers can learn and process information at the point where it is sensed, without transferring it to the cloud. This greatly improves speed and efficiency and reduces power use.
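The article does not describe the device physics or the exact update rule, so the following is only a minimal numerical sketch of the general idea, not the Sandia team's programming scheme. It assumes each synaptic weight is stored as a conductance in a crossbar array: reading the array performs a vector-matrix multiply in one step, and an outer-product (delta-rule) update changes every weight at once rather than writing cells one by one. The function names, the learning rate and the conductance bounds `g_min`/`g_max` are hypothetical.

```python
# Hypothetical sketch: synaptic weights stored as conductances in a crossbar.
# Not a model of the ionic floating-gate device; names and bounds are illustrative.
from typing import List

Matrix = List[List[float]]


def read_out(conductances: Matrix, inputs: List[float]) -> List[float]:
    """Vector-matrix multiply as an idealized analog crossbar performs it.

    Row i is driven with voltage inputs[i]; the current collected on column j
    is the sum over i of inputs[i] * conductances[i][j] (Ohm's law per cell,
    Kirchhoff's current law per column).
    """
    n_cols = len(conductances[0])
    return [
        sum(v * row[j] for v, row in zip(inputs, conductances))
        for j in range(n_cols)
    ]


def parallel_update(
    conductances: Matrix,
    row_signals: List[float],
    col_signals: List[float],
    rate: float,
    g_min: float = 0.0,  # hypothetical lower conductance limit
    g_max: float = 1.0,  # hypothetical upper conductance limit
) -> Matrix:
    """Outer-product update: every cell (i, j) changes by
    rate * row_signals[i] * col_signals[j] in the same step.
    Results are clipped to the assumed range [g_min, g_max]."""
    return [
        [min(g_max, max(g_min, g + rate * r * c)) for g, c in zip(row, col_signals)]
        for row, r in zip(conductances, row_signals)
    ]


if __name__ == "__main__":
    G = [[0.5, 0.5], [0.5, 0.5]]
    x = [1.0, 0.0]        # input pattern applied to the rows
    target = [1.0, 0.0]   # desired column outputs
    y = read_out(G, x)
    error = [t - o for t, o in zip(target, y)]
    G = parallel_update(G, x, error, rate=0.5)  # all weights updated in one step
    print(read_out(G, x))  # outputs move toward the target
```

With these inputs the first row of weights moves from `[0.5, 0.5]` to `[0.75, 0.25]` while the second row, whose input is zero, is left unchanged. In a physical array the same effect would come from applying signals to all rows and columns simultaneously, which is what makes a single-operation update attractive compared with programming cells serially.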

Through machine learning technology, mainstream digital applications can recognize and understand complex patterns in data. For example, virtual assistants such as Amazon’s Alexa or Apple’s Siri sort through large streams of data to understand voice commands and improve over time.

With the dramatic recent growth of machine learning, applications now demand much more data storage and power to complete these difficult tasks. Traditional digital computing architecture isn’t designed or optimized for the artificial neural networks that are essential to machine learning.

To further compound the problem, conventional semiconductor fabrication technology has reached its physical limits. Chips simply cannot be shrunk further to meet the demand for energy efficiency.

With conventional computer chips, information is stored in memory with high precision but has to be shuttled through a bus to a processor to execute tasks, causing delays and excess energy consumption.

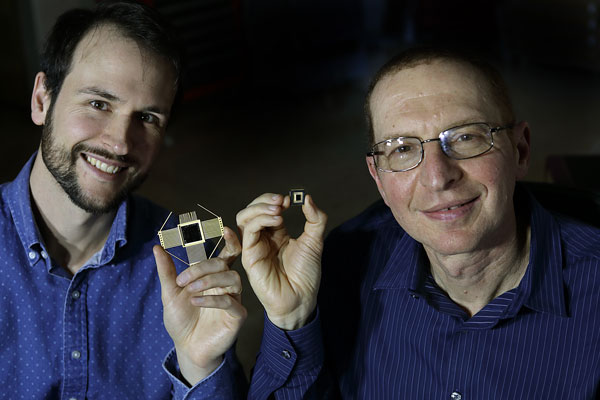

“With the ability to update all of the data in a task simultaneously in a single operation, our work offers unmistakable performance and power advantages,” said Sandia researcher Elliot Fuller. “This is projected to improve machine learning while using a fraction of the power of a standard processor and 10 times higher speed than the best digital computers.”

The work demonstrates the fast speed, high endurance and low operating voltage that low-energy computing requires, qualities that are becoming more important in applications such as driverless cars, wearable devices and automated assistant technology. As society increasingly depends on these applications for health and safety functions, achieving better accuracy and speed without relying on cloud computing becomes critical.

The technology introduces a novel redox transistor approach into conventional silicon processing. The redox transistor — a device that functions like a tiny rechargeable battery — relies upon polymers that use ions to store information, not just electrons as with conventional silicon-based computers.

Future Sandia research will focus on understanding the fundamental mechanisms that govern how redox transistor devices operate, with the goal of making them more reliable, faster and easier to combine with digital electronics. Researchers are also interested in demonstrating larger, more complex circuits based on the technology.