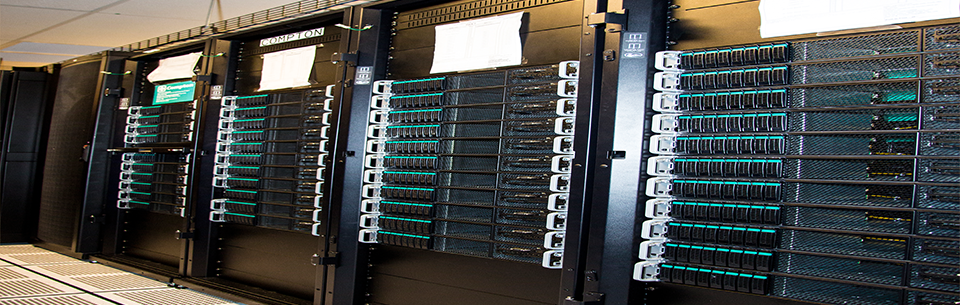

As part of NNSA’s Advanced Simulation and Computing (ASC) project, Sandia has acquired a set of advanced architecture test beds to help prepare applications and system software for the disruptive computer architecture changes that have begun to emerge and will continue to appear as HPC systems approach Exascale. While these test beds can be used for node-level exploration, they also provide the ability to study inter-node characteristics to understand future scalability challenges. To date, the test bed systems populate 1-6 racks and have on the order of 50-200 multi-core nodes, many with an attached co-processor or GP-GPU.

The test beds allow for pathfinding explorations of 1) alternative programming models, 2) architecture-aware algorithms, 3) energy-efficient runtime and system software, 4) advanced memory sub-system development and 5) application performance. Computer architecture simulation studies can also be validated on these early examples of future Exascale platform architectures. As proxy applications are developed and re-implemented in architecture-centric versions, the developers need these advanced architecture systems to explore how to adapt to an “MPI + X” paradigm, where “X” may be more than one disparate alternative. This, in turn, demands that tools be developed to inform the performance analyses. ASC has embraced a co-design approach for its future advanced technology systems. By purchasing from and working closely with the vendors on pre-production test beds, both ASC and the vendors are afforded early guidance and feedback on their paths forward. This applies not only to hardware but also to other enabling technologies such as system software, compilers, and tools.

There are currently several test beds available for use, with more in the planning and integration phases. They represent distinct architectural directions and/or unique features important for future study. Examples of the latter are custom power monitors and on-node solid-state disks (SSDs).

Heterogeneous Advanced Architecture Platform Test Beds

| Host Name | Nodes | CPU | Accelerator or Co-Processor | Cores per Accelerator or Co-Processor | Interconnect | Other |
|---|---|---|---|---|---|---|
| Blake | 40 | Dual-Socket Intel Xeon Platinum (24 cores) | None | N/A | Intel OmniPath | Each core has dual AVX512 vector processing units with FMA |
| Caraway | 4 | Dual AMD EPYC 7401 | Dual-GPU AMD Radeon Instinct MI25 | 64 CUs | Mellanox FDR InfiniBand | Only two nodes have GPUs |
| DodgeCity | 1 | Intel Xeon Platinum | 16x GraphCore IPUs per node | 1472 cores | N/A | Custom Machine Learning accelerator |
| Inouye | 7 | Fujitsu A64FX (48 cores) | None | N/A | Mellanox EDR InfiniBand | Fujitsu PRIMEHPC FX700, Armv8.2-A SVE instruction set |
| Mayer | 44 | Four ThunderX2 (B0) (28 cores); forty ThunderX2 (A1) (28 cores) | None | N/A | Mellanox EDR InfiniBand (with socket direct) | Small-scale Vanguard Astra/Stria prototype |
| Morgan | 8 | Four Dual Intel Ivy Bridge; five Intel Cascade Lake (24 cores) | Dual Intel Xeon Phi Co-processor | Two 57-core; two 61-core | Mellanox QDR InfiniBand | Intel Xeon Phi (codenamed Knights Corner) available only on Ivy Bridge nodes |
| Ride | 4 | Dual IBM Power8 (10 cores) | None | N/A | Mellanox FDR InfiniBand | Technology on the path to anticipated CORAL systems |
| Voltrino | 56 | Dual Intel Xeon Ivy Bridge (24 cores) | None | N/A | Cray Aries | Cray XC30m; full-featured RAS system including power monitoring and control capabilities |
| White | 9 | Dual IBM Power8 (10 cores) | Dual Nvidia K40 | 2880 cores | Mellanox FDR InfiniBand | Technology on the path to anticipated CORAL systems |
| Weaver | 10 | Dual IBM Power9 (20 cores) | Dual Nvidia Tesla V100 | 5120 cores | Mellanox EDR InfiniBand | Technology on the path to anticipated CORAL systems |

Application Readiness Test Beds

| Host Name | Nodes | CPU | Accelerator or Co-Processor | Cores per Accelerator or Co-Processor | Interconnect | Other |
|---|---|---|---|---|---|---|
| Mutrino | 200 | One-hundred Haswell (32 cores); one-hundred Intel Knights Landing (64 cores) | None | N/A | Cray Aries Dragonfly | Small-scale Cray XC system supporting the Sandia/LANL ACES partnership Trinity platform located at LANL |
| Vortex | 72 | Dual IBM Power9 (22 cores) | Quad Tesla V100 GPU per Node | 5120 cores | Mellanox EDR InfiniBand (Full fat-tree) | Small-scale IBM system supporting evaluation of codes to run on the target LLNL Sierra cluster |